Bluesky 2023 Moderation Report

January 16, 2024

by The Bluesky Team

Safety is core to social media. Transparency is essential to building trust in moderation efforts, and the data we are providing here is a first step towards full transparency reports. We hope these early disclosures provide some visibility into our behind-the-scenes operations, and we intend to deliver more granular data and reporting in the future. Given the transparent nature of the network, independent researchers also already have direct access to relatively rich data on labeling and moderation interventions.

High-Level Overview

Over the past year, the Bluesky app has grown from a handful of friends and developers to over 3 million registered accounts. We have hired and trained a full-time team of moderators, launched and iterated on several community and individual moderation features, developed and refined both public and internal policies, designed and redesigned product features to reduce abuse, and built several infrastructure components from scratch to support our Trust and Safety work.

We’ve made a lot of progress, and we know we still have a long way to go to deliver on the vision we set out, including composable moderation, mute words, temporary account deactivation, and collaborative programs to combat misinformation (such as Community Notes). We will have more to share in the coming weeks! But first, here is an overview of 2023.

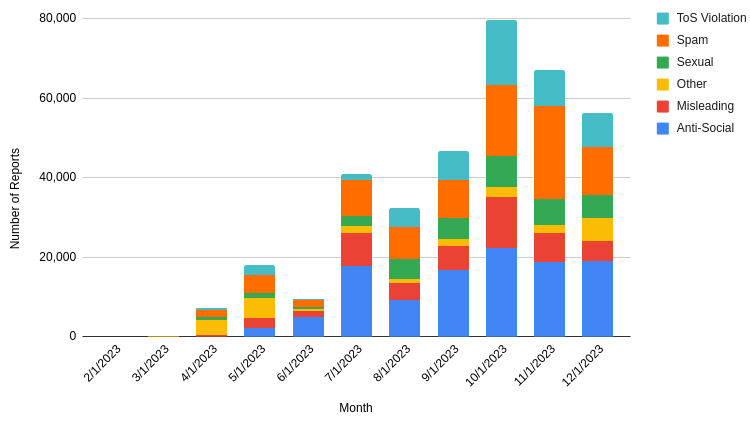

Reporting By The Numbers

One of the most obvious and visible forms of moderation on Bluesky is the report-and-review feedback loop. Any user can report posts, accounts, or lists through the app. We then review reported content and behavior against both the Community Guidelines and Terms of Service, and take action when appropriate.

Our team of proactive and very-much-appreciated full-time moderators has provided around-the-clock coverage since spring. We’re doing things differently than many social media sites: we hired our moderators directly rather than relying on a third-party vendor. While we might use automated tools to handle reports in the future, right now every single report filed has been reviewed by a human, with no automated resolution. In 2023 we reviewed 358,165 individual reports.

A relatively small fraction of accounts created the majority of reports, and, separately, a small fraction of accounts received the majority of reports. In total, 76,699 individual accounts (5.6% of our active users) created one or more reports, and 45,861 individual accounts (3.4% of our active users) received one or more reports (either on the account itself or on a piece of content it created).
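The two percentages also imply the size of the active-user base, which this post does not state directly. The TypeScript sketch below works that out, assuming each percentage was rounded to one decimal place (so "5.6%" stands for anything from 5.55% to 5.65%). The function and type names are illustrative, and "active users" is used in whatever sense the figures above use it.

```typescript
// Range of base sizes consistent with `count` being `roundedPercent`% of it,
// assuming the percentage was rounded to one decimal place.
interface Interval {
  lo: number;
  hi: number;
}

function impliedBase(count: number, roundedPercent: number): Interval {
  const halfStep = 0.05; // half of the last reported decimal place
  return {
    lo: (count * 100) / (roundedPercent + halfStep), // largest plausible share
    hi: (count * 100) / (roundedPercent - halfStep), // smallest plausible share
  };
}

// Overlap of two intervals, or null if they are disjoint.
function intersect(a: Interval, b: Interval): Interval | null {
  const lo = Math.max(a.lo, b.lo);
  const hi = Math.min(a.hi, b.hi);
  return lo <= hi ? { lo, hi } : null;
}

const reporters = impliedBase(76_699, 5.6); // accounts that filed >= 1 report
const reported = impliedBase(45_861, 3.4); // accounts that received >= 1 report
const activeUsers = intersect(reporters, reported);

if (activeUsers !== null) {
  console.log(`${Math.round(activeUsers.lo)} to ${Math.round(activeUsers.hi)} active users`);
}
```

Under that rounding assumption, the two ratios overlap only for an active-user base of roughly 1.36 to 1.37 million accounts, so the two figures are consistent with each other.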

When we take action on a report, our interventions have ranged from warning emails, to labels, to takedowns of content or entire accounts. Takedowns can be either temporary suspensions or permanent. While we used all of these interventions over the course of 2023, this post breaks down only takedowns: 4,667 accounts taken down, and an additional 1,817 individual pieces of content taken down.

In addition to reports from the community, we have a number of automated tools that proactively detect suspicious or harmful conduct, such as slurs in handles and spam accounts, without a user first reporting it to the moderation team. Some of these tools act directly on visual content by applying labels like "gore" to violent imagery, while others simply file automated reports for human review. Users can appeal labels applied to their account through the same in-app reporting flow, and the moderation team will then review the label again. The numbers above do not include the large volume of reports created by our own automated systems.
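The split between detectors that label directly and detectors that only file reports can be pictured as a routing step. This TypeScript example is a minimal illustration under stated assumptions: the `Detection` shape, the detector kinds, and the 0.9 confidence cutoff are hypothetical and are not taken from Bluesky's systems.

```typescript
// Hypothetical output of an automated detector.
type DetectorKind = "imagery" | "handle" | "spam";

interface Detection {
  subject: string; // identifier of the post or account (illustrative)
  kind: DetectorKind;
  label?: string; // suggested label for imagery, e.g. "gore"
  confidence: number; // 0..1
}

type Outcome =
  | { action: "apply-label"; subject: string; label: string }
  | { action: "file-report"; subject: string; reason: string };

// Assumed cutoff above which an imagery label is applied without review.
const DIRECT_LABEL_THRESHOLD = 0.9;

function route(d: Detection): Outcome {
  const label = d.label;
  if (d.kind === "imagery" && label !== undefined && d.confidence >= DIRECT_LABEL_THRESHOLD) {
    // Direct action on visual content; the author can still appeal the label.
    return { action: "apply-label", subject: d.subject, label };
  }
  // Everything else goes to the human review queue as an automated report.
  return { action: "file-report", subject: d.subject, reason: `automated ${d.kind} detection` };
}
```

Sending anything uncertain to the report queue keeps a human in the loop for every non-label outcome.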

Child sexual abuse and exploitation, including the distribution of child sexual abuse material (CSAM), is obviously unacceptable anywhere on the Internet, including Bluesky. We built AT Protocol and Bluesky so that we can rapidly identify and remove this content anywhere it appears on our network, even once federation becomes available. To help proactively detect, take down, review, and report CSAM to the applicable authorities (including NCMEC in the United States), we partnered with an established non-profit vendor that provides both hash-matching APIs and specialist web apps for review and reporting. While any instance of this behavior is dangerous and warrants prompt action, to date we have thankfully not encountered a significant volume of such content on the network. Our systems proactively identified four instances of potential CSAM: two were statistical false positives, and two were confirmed to meet reporting requirements and were reported to NCMEC.

For this report, we are not yet including counts of legal requests and the fraction of requests serviced, nor are all of our moderation actions (such as labels) fully represented in the numbers we’ve shared here. We look forward to providing more granular data and reporting in the future.

Interaction Controls

No amount of reactive moderation will help if the base design of a product rewards abuse or inflames inter-community conflict. In 2023, we added a number of app and protocol features that give individuals and groups more control over their interactions in the network, with the aim of building safety options into the design of Bluesky itself.

Moderation lists can be used for muting and blocking. Individuals can maintain curated lists of accounts for an entire community, reducing duplicated effort. Lists themselves are always public, and acting on a list carries the same privacy as the equivalent individual action: blocking a list is public, while muting a list is private. Like all technical safety features, lists have both advantages and disadvantages as a moderation primitive. They can be a vector for harassment and abuse (and thus themselves require moderation), become difficult to maintain at larger scale, and have no built-in affordances for collaborative control or accountability. On the other hand, they are relatively familiar and easy to understand, have a low barrier to adoption, and integrate easily with everyday use of the app.

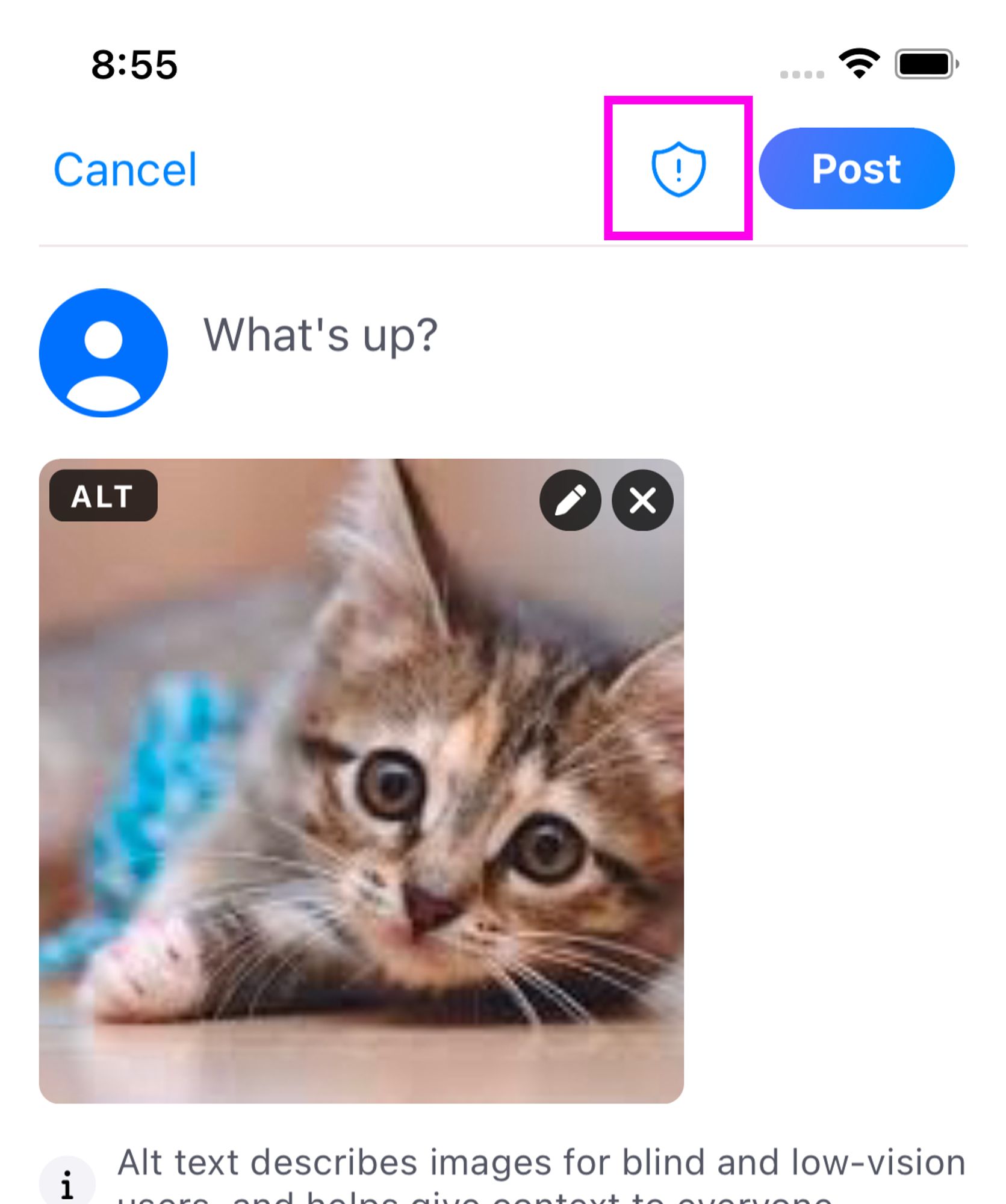

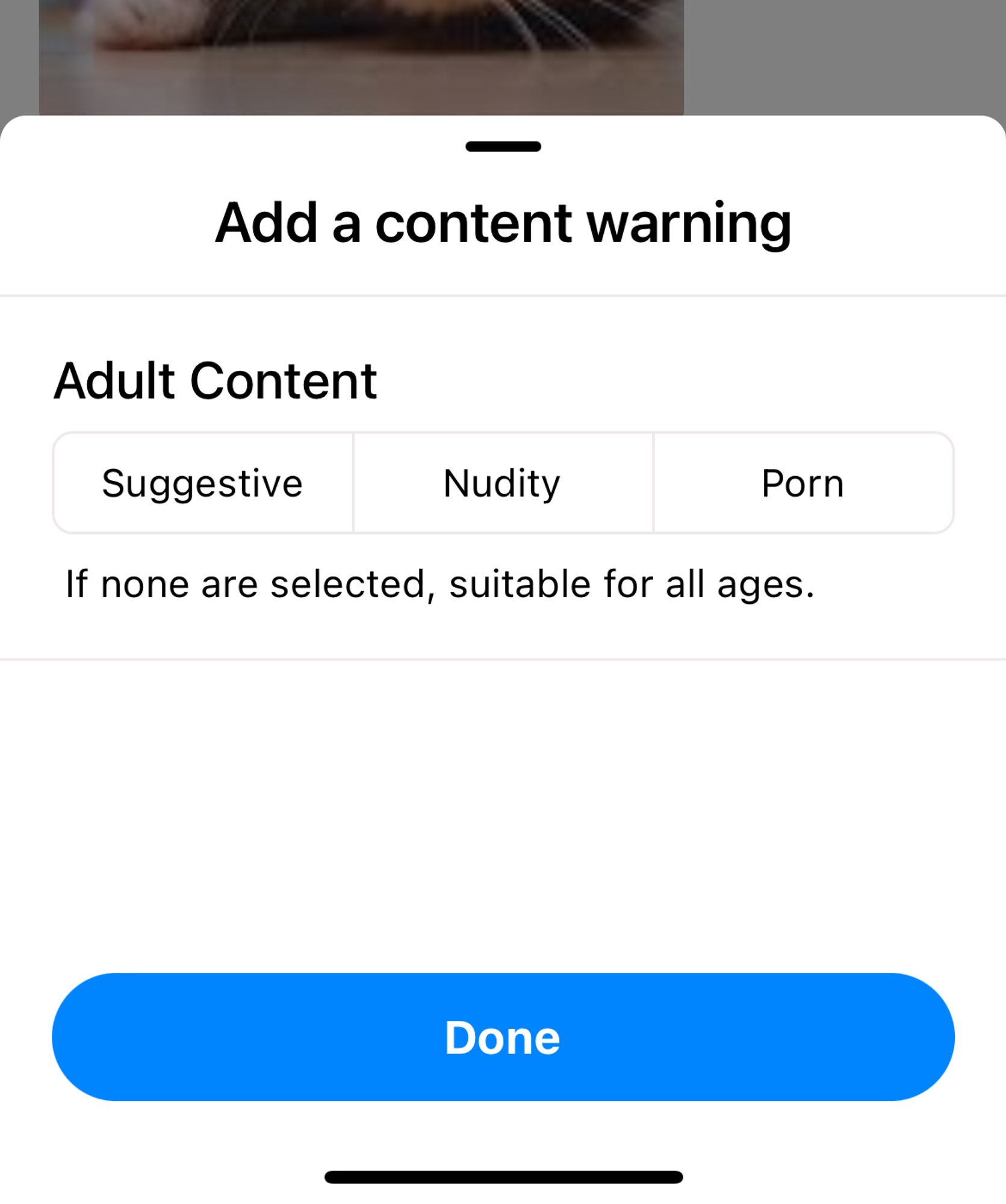
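A moderation list is essentially a shared set of accounts that many viewers can subscribe to, with the subscription mode determining whether the action is public. The TypeScript sketch below models that idea; the type and function names are hypothetical and do not mirror the atproto Lexicon schemas.

```typescript
type Did = string; // account identifier, simplified to a string

interface ModerationList {
  uri: string; // the list itself is always a public record
  curator: Did;
  members: Set<Did>;
}

type ListAction = "block" | "mute";

interface ListSubscription {
  list: ModerationList;
  action: ListAction;
}

// List actions mirror individual ones: blocks are public, mutes are private.
function isPublic(action: ListAction): boolean {
  return action === "block";
}

// Combine a viewer's list subscriptions into their effect on one author.
function effectOn(author: Did, subs: ListSubscription[]): { blocked: boolean; muted: boolean } {
  let blocked = false;
  let muted = false;
  for (const sub of subs) {
    if (!sub.list.members.has(author)) continue;
    if (sub.action === "block") blocked = true;
    else muted = true;
  }
  return { blocked, muted };
}
```

Because one curated list can back many viewers' subscriptions, adding a single account to it updates everyone subscribed. The same property is what makes a list a possible harassment vector.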

Self-labeling of individual posts lets authors ensure appropriate content warnings are in place for viewers. This feature is currently limited to posts with images and to adult-content labels, but there is a clear path to expanding it to more labels.

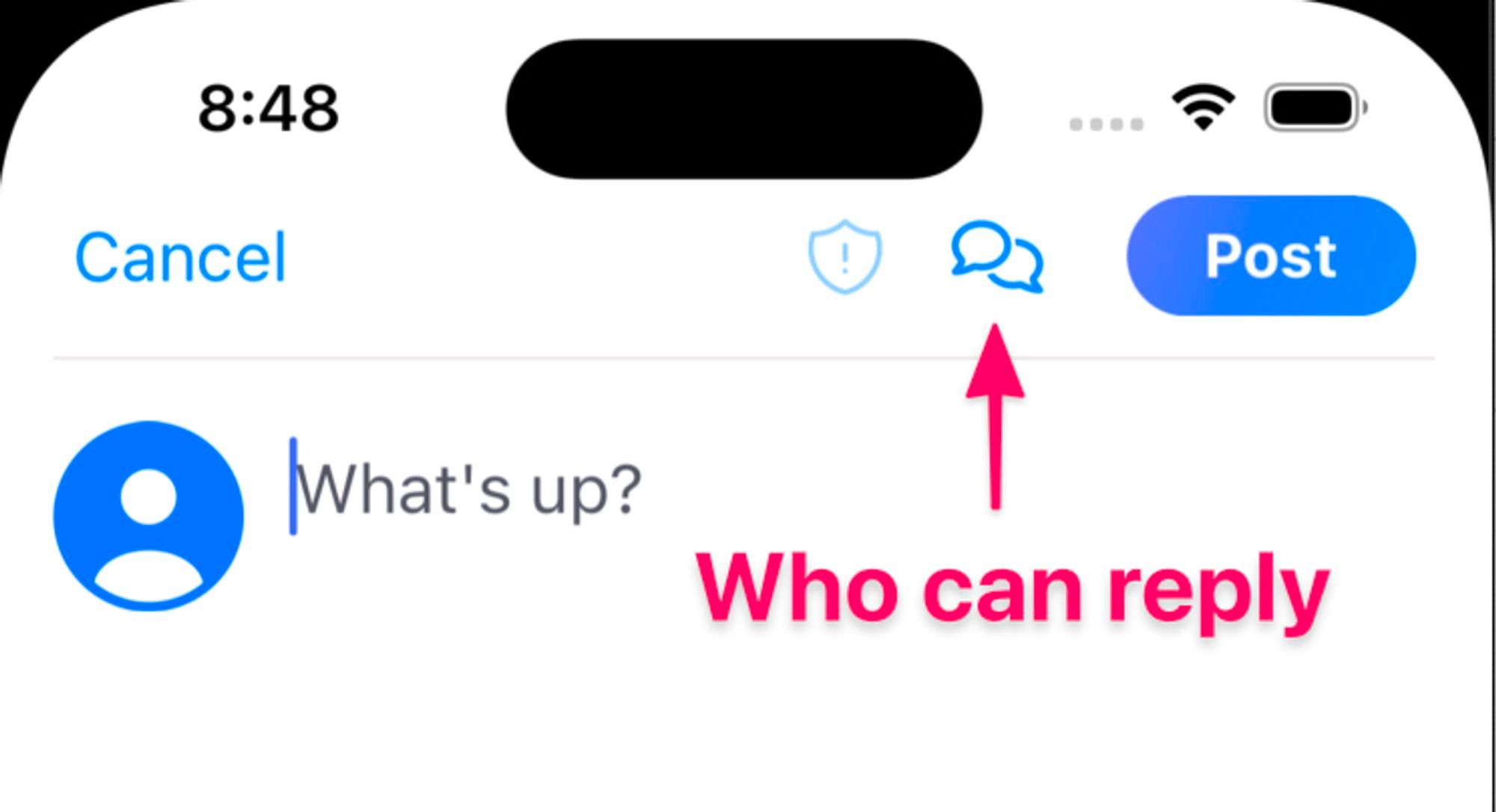

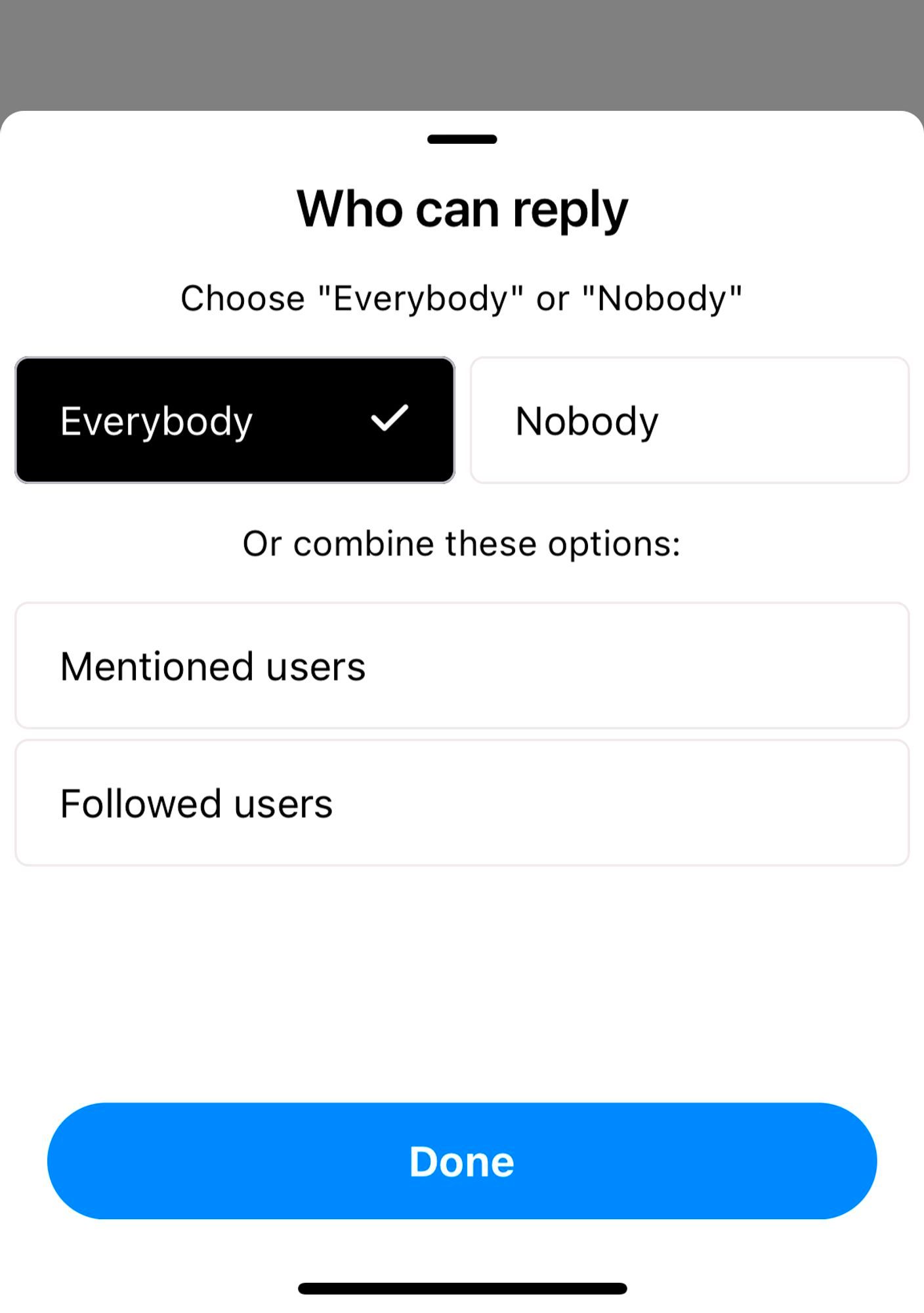

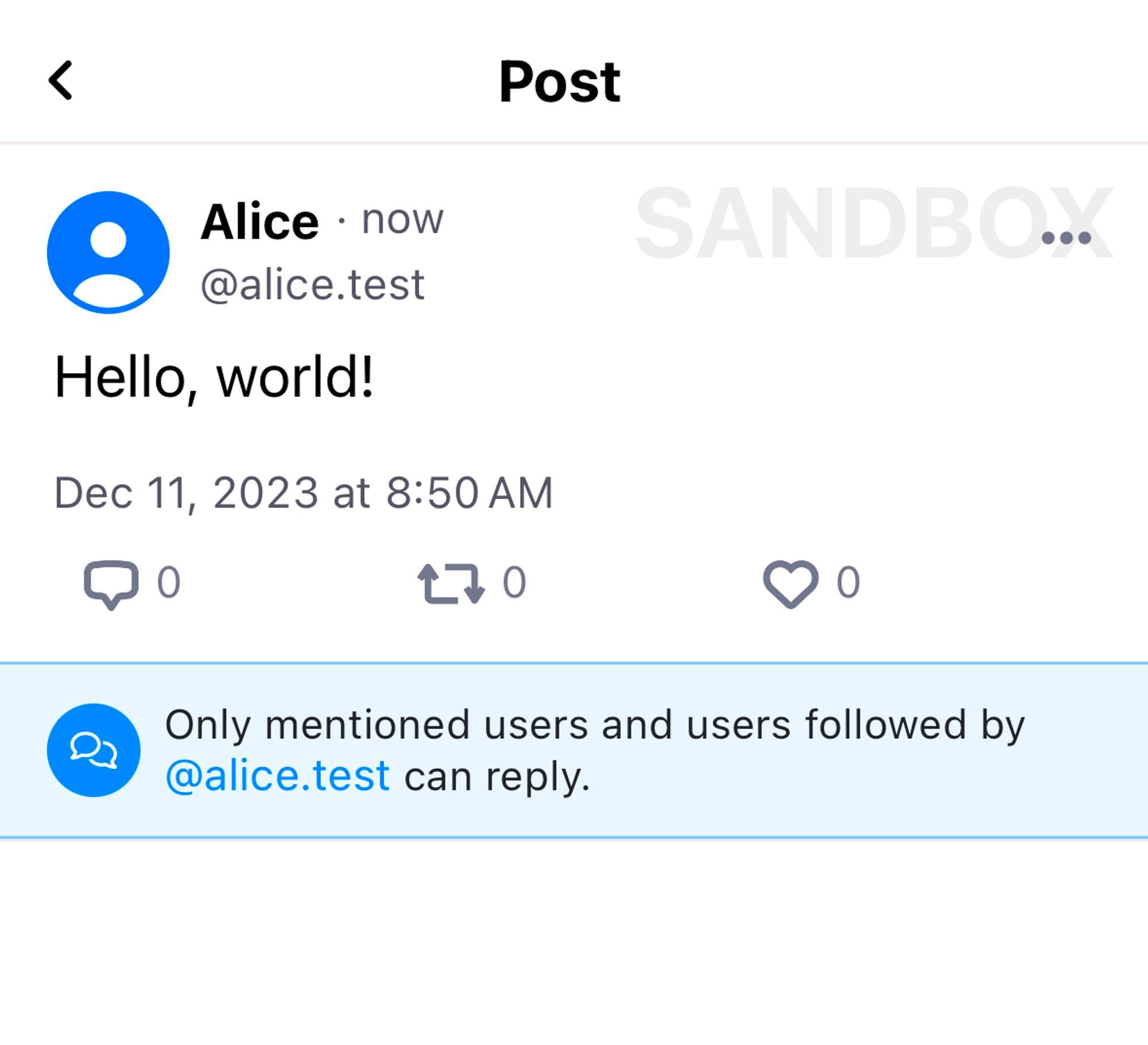
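Self-labels travel with the post, and each viewer's preferences decide whether a labeled post is shown, placed behind a warning, or hidden. The TypeScript sketch below is a hypothetical model of that flow: the `Post` shape, the label names, and the "warn" default for labels without a stated preference are assumptions, not the actual atproto record format or app behavior.

```typescript
type Preference = "show" | "warn" | "hide";

interface Post {
  text: string;
  hasImages: boolean;
  selfLabels: string[]; // labels the author attached to this post
}

// Assumed set of adult-content labels an author may self-apply today.
const SELF_APPLICABLE = new Set(["sexual", "nudity", "porn"]);

// Mirrors the current scope: only posts with images, only adult-content labels.
function canSelfLabel(post: Post, label: string): boolean {
  return post.hasImages && SELF_APPLICABLE.has(label);
}

// The viewer's per-label preferences decide how a self-labeled post is shown;
// the most restrictive preference wins.
function display(post: Post, prefs: Record<string, Preference>): Preference {
  let result: Preference = "show";
  for (const label of post.selfLabels) {
    const pref: Preference = prefs[label] ?? "warn"; // assumed default: warn
    if (pref === "hide") return "hide";
    if (pref === "warn") result = "warn";
  }
  return result;
}
```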

Reply controls give authors choice over who can interact in their threads, including the ability to close all replies to a post.
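Reply controls amount to a per-post set of rules checked when someone tries to reply. The TypeScript sketch below is a hypothetical model, not the actual atproto record format; in particular, treating "no rules configured" as open replies, an empty rule list as closed replies, and letting the author always reply are assumptions made for illustration.

```typescript
type Did = string;

// Hypothetical reply rules an author can attach to a post.
type ReplyRule =
  | { kind: "mentioned" } // accounts mentioned in the post
  | { kind: "following" } // accounts the author follows
  | { kind: "list"; members: Set<Did> }; // members of a chosen list

interface ThreadContext {
  author: Did;
  mentioned: Set<Did>;
  authorFollows: Set<Did>;
}

// allow === undefined: anyone may reply; allow === []: replies are closed.
function canReply(replier: Did, ctx: ThreadContext, allow?: ReplyRule[]): boolean {
  if (replier === ctx.author) return true; // assumption: authors can always reply
  if (allow === undefined) return true;
  return allow.some((rule) => {
    if (rule.kind === "mentioned") return ctx.mentioned.has(replier);
    if (rule.kind === "following") return ctx.authorFollows.has(replier);
    return rule.members.has(replier); // rule.kind === "list"
  });
}
```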

Several more basic interaction controls are in development or on the horizon. Hiding individual posts and appealing labeling decisions on your own posts are both available now as beta-quality features. We are also tracking requests for muting keywords and for letting users temporarily deactivate their own accounts. We’ll share more about these planned features in the coming months.

Supporting Tools and Infrastructure

Trust and safety efforts rely on tooling, and much of our work has been building that supporting tooling and infrastructure from scratch. We made a lot of progress behind the scenes in 2023 to support our moderation teams and policies.

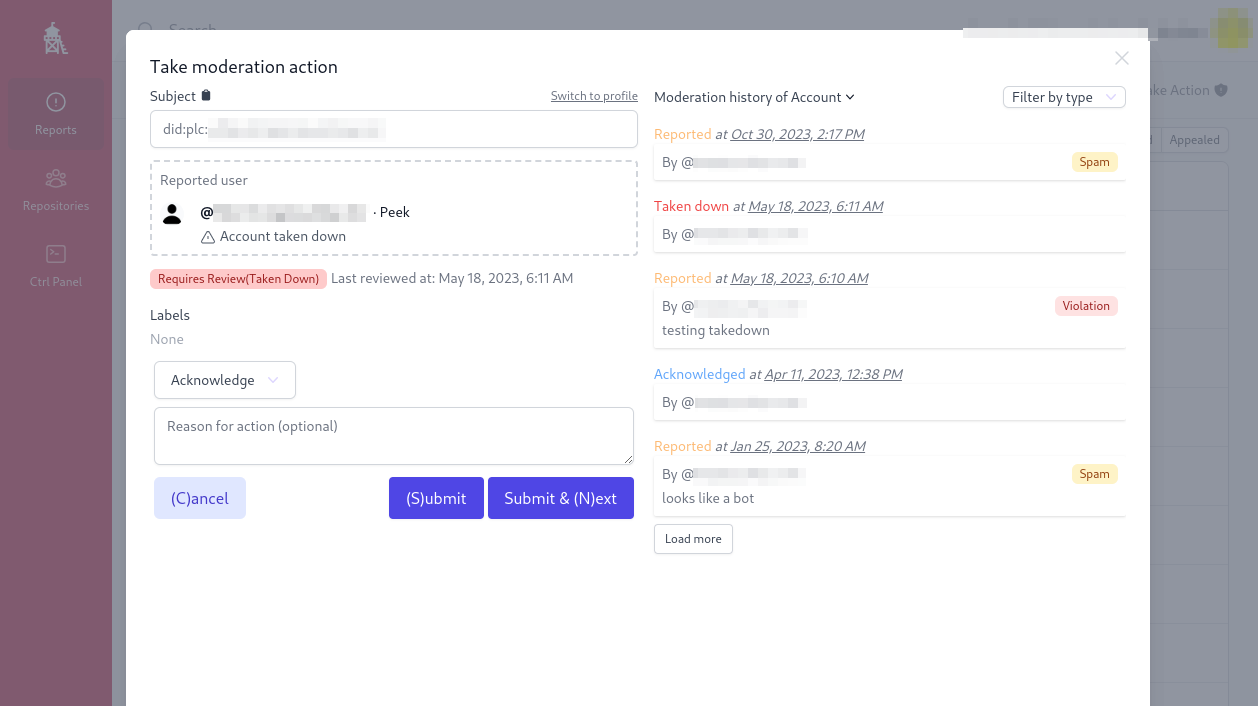

Our moderation review tools have gone through major refactors and workflow improvements over the year, based on feedback from our moderators and policy experts. What started as a minimal admin interface now handles tiered review queues, templated email workflows, moderation event timelines, and more. From a technical perspective, the APIs driving this system, for both reporting and review, are defined the same way as other atproto endpoints, which supports interoperability with alternative clients and workflow systems. We named the front-end interface of the moderation review system Ozone, and we plan to open-source it soon. We’re also in the process of pulling out the moderation back-end as a standalone service that other organizations can self-host.
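One way to read "moderation event timelines" and "tiered review queues" together is as an append-only log of events per subject, replayed to derive each subject's current state, with queues built from that state. The TypeScript sketch below illustrates that general pattern only; the event types, the two-tier scheme, and all names are hypothetical and are not Ozone's data model.

```typescript
type Tier = 1 | 2; // hypothetical: tier 2 handles escalated cases

type ModEvent =
  | { type: "report"; at: number; reason: string }
  | { type: "escalate"; at: number; by: string }
  | { type: "label"; at: number; by: string; label: string }
  | { type: "email"; at: number; by: string; template: string }
  | { type: "takedown"; at: number; by: string }
  | { type: "resolve"; at: number; by: string };

interface SubjectState {
  open: boolean;
  tier: Tier;
  labels: string[];
  takenDown: boolean;
}

// Replay a subject's timeline in time order to derive its current state.
function replay(events: ModEvent[]): SubjectState {
  const state: SubjectState = { open: false, tier: 1, labels: [], takenDown: false };
  const ordered = events.slice().sort((a, b) => a.at - b.at);
  for (const e of ordered) {
    switch (e.type) {
      case "report": state.open = true; break;
      case "escalate": state.tier = 2; break;
      case "label": state.labels.push(e.label); break;
      case "takedown": state.takenDown = true; state.open = false; break;
      case "resolve": state.open = false; break;
      case "email": break; // kept in the timeline for context; no state change
    }
  }
  return state;
}

// A tiered queue lists the open subjects currently assigned to one tier.
function queue(timelines: Map<string, ModEvent[]>, tier: Tier): string[] {
  const open: string[] = [];
  timelines.forEach((events, subject) => {
    const s = replay(events);
    if (s.open && s.tier === tier) open.push(subject);
  });
  return open;
}
```

Keeping the full timeline rather than only the latest status lets reviewers see every prior report, email, and decision on a subject when it comes back into a queue.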

Lastly, we have developed a rule-based automod framework that augments our moderation team when battling spam and proactively flags unacceptable content before it is seen and reported by human users. This has helped identify some patterns of engagement farming and spam, and we will continue to develop and refine abuse detectors as we move toward open federation and grow the network.
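A rule-based automod framework can be as simple as a list of named rules, each inspecting an incoming event and producing a flag for human review when it matches. The TypeScript sketch below is a hypothetical illustration: the event fields, the placeholder term list, and the new-account burst thresholds are invented for the example and do not describe Bluesky's actual rules.

```typescript
// Hypothetical event emitted when an account publishes a post.
interface PostEvent {
  author: string;
  handle: string;
  accountAgeHours: number;
  postsLastHour: number;
}

interface Flag {
  rule: string;
  subject: string;
  reason: string;
}

interface Rule {
  name: string;
  matches(e: PostEvent): boolean;
  reason: string;
}

// Placeholder list; a real deployment would maintain its term list separately.
const BLOCKED_HANDLE_TERMS = ["badterm"];

const rules: Rule[] = [
  {
    name: "handle-terms",
    matches: (e) => BLOCKED_HANDLE_TERMS.some((t) => e.handle.toLowerCase().includes(t)),
    reason: "disallowed term in handle",
  },
  {
    // Hypothetical thresholds for a new account posting in bursts.
    name: "new-account-burst",
    matches: (e) => e.accountAgeHours < 24 && e.postsLastHour > 50,
    reason: "possible spam or engagement farming",
  },
];

// Evaluate every rule; each match becomes a report for human review.
function runRules(e: PostEvent, ruleset: Rule[]): Flag[] {
  const flags: Flag[] = [];
  for (const r of ruleset) {
    if (r.matches(e)) flags.push({ rule: r.name, subject: e.author, reason: r.reason });
  }
  return flags;
}
```

Keeping each rule small and independently named makes it straightforward to add, tune, or retire detectors as new abuse patterns appear, while a human still resolves every resulting report.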