Updates to Our Moderation Process

November 19, 2025

by The Bluesky Team

Our community has doubled in size in the last year. It has grown from a collection of small communities to a global gathering place to talk about what’s happening. People are coming to Bluesky because they want a place where they can have conversations online again. Most platforms are now just media distribution engines. We are bringing social back to social media.

On Bluesky, people are meeting and falling in love, being discovered as artists, and having debates on niche topics in cozy corners. At the same time, some of us have developed a habit of saying things behind screens that we'd never say in person. To maintain a space for friendly conversation as well as fierce disagreement, we need clear standards and expectations for how people treat each other. In October, we announced our commitment to building healthier social media and updated our Community Guidelines. Part of that work includes improving how users can report issues, holding repeat violators accountable and providing greater transparency.

Today, we're introducing updates to how we track Community Guidelines violations and enforce our policies. We're not changing what's enforced - we've streamlined our internal tooling to automatically track violations over time, enabling more consistent, proportionate, and transparent enforcement.

What's Improving

As Bluesky grows, so must the complexity of our reporting system. In Bluesky’s early days, our reporting system was simple, because it served a smaller community. Now that we’ve grown to 40 million users across the world, regulatory requirements apply to us in multiple regions.

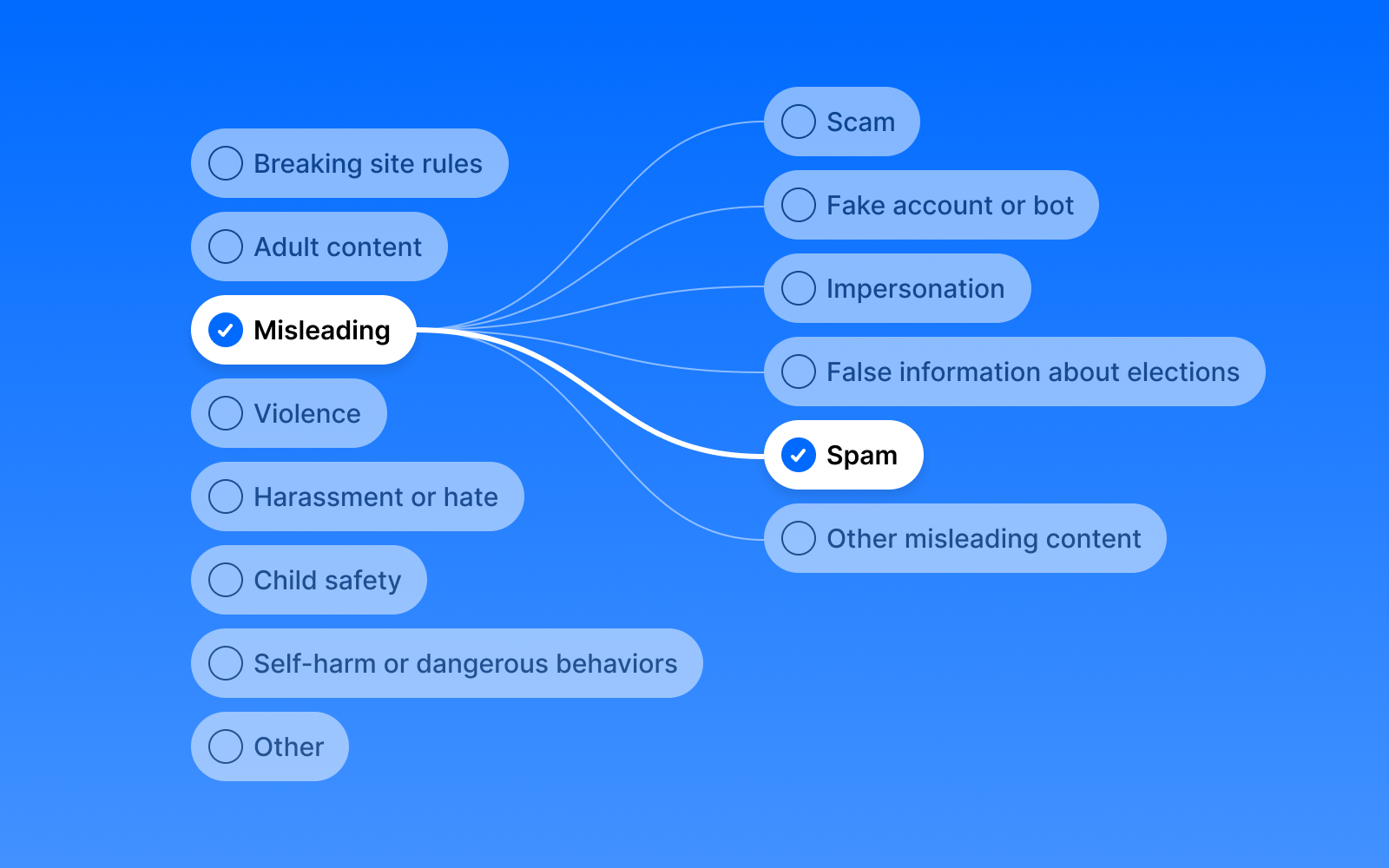

To meet those needs, we’re rolling out a significant update to Bluesky’s reporting system. We’ve expanded post-reporting options from 6 to 39. This update offers you more precise ways to flag issues, provides moderators better tools to act on reports quickly and accurately, and strengthens our ability to address our legal obligations around the world.

Our previous tools tracked violations individually across policies. We've improved our internal tooling so that when we make enforcement decisions, those violations are automatically tracked in one place and users receive clear information about where they stand.

This system is designed to strengthen Bluesky’s transparency, proportionality, and accountability. It applies our Community Guidelines, with better infrastructure for tracking violations accurately and communicating outcomes clearly.

In-app Reporting Changes

Last month, we introduced updated Community Guidelines that provide more detail on the minimum requirements for acceptable behavior on Bluesky. These guidelines were shaped by community feedback along with regulatory requirements. The next step is aligning the reporting system with that same level of clarity.

Starting with the next app release, users will notice new reporting categories in the app. The old list of broad options has been replaced with more specific, structured choices.

For example:

- You can now directly report Youth Harassment or Bullying, or content related to Eating Disorders, reflecting the increased need to protect youth from harm on social media.

- You can flag Human Trafficking content, reflecting requirements under laws like the UK’s Online Safety Act.

This granularity helps our moderation systems and teams act faster and with greater precision. It also allows for more accurate tracking of trends and harms across the network.

Strike System Changes

When content violates our Community Guidelines, we assign a severity rating based on potential harm. Violations can result in a range of actions - from warnings and content removal for first-time, lower-risk violations to immediate permanent bans for severe violations or patterns demonstrating intent to abuse the platform.

Severity Levels

- Critical Risk: Severe violations that threaten, incite, or encourage immediate real-world harm, or patterns of behavior demonstrating intent to abuse the platform → Immediate permanent ban

- High Risk: Severe violations that threaten harm to individuals or groups → Higher penalty

- Moderate Risk: Violations that degrade community safety → Medium penalty

- Low Risk: Policy violations where education and behavior change are priorities → Lower penalty
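For readers who think in code, the severity levels above can be pictured as a small lookup. This is a rough sketch only: we have not published numeric penalties, so the point values in `PENALTY_POINTS` and the names `Severity` and `penalty_for` are hypothetical and chosen purely for illustration.

```python
from enum import Enum


class Severity(Enum):
    LOW = "low"
    MODERATE = "moderate"
    HIGH = "high"
    CRITICAL = "critical"


# Hypothetical weights. The post only says "lower", "medium", and "higher".
PENALTY_POINTS = {
    Severity.LOW: 1,
    Severity.MODERATE: 2,
    Severity.HIGH: 4,
}


def penalty_for(severity: Severity) -> tuple[str, int]:
    """Return (action, points) for a single violation."""
    if severity is Severity.CRITICAL:
        # Critical-risk violations lead straight to a permanent ban,
        # so no points are accumulated.
        return ("permanent_ban", 0)
    return ("strike", PENALTY_POINTS[severity])
```

The only structure taken from the list is the ordering (low < moderate < high) and the fact that critical-risk violations bypass accumulation.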

Account-Level Actions

As violations accumulate, account-level actions escalate from temporary suspensions to permanent bans.

Not every violation leads to immediate account suspension - this approach prioritizes user education and gradual enforcement for lower-risk violations. But repeated violations escalate consequences, ensuring patterns of harmful behavior face appropriate accountability.
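As an illustration of how accumulating violations could escalate, here is a minimal Python sketch. The thresholds, suspension lengths, and the names `THRESHOLDS`, `account_action`, and `AccountRecord` are all assumptions made for this example and do not describe our actual values or tooling.

```python
# Hypothetical thresholds: (minimum accumulated points, account-level action).
# Listed in ascending order; the real values are not published in this post.
THRESHOLDS = [
    (4, "suspend_24h"),
    (8, "suspend_7d"),
    (12, "permanent_ban"),
]


def account_action(total_points: int) -> str:
    """Return the strongest account-level action reached by total_points."""
    action = "none"
    for minimum, name in THRESHOLDS:
        if total_points >= minimum:
            action = name
    return action


class AccountRecord:
    """Tracks accumulated penalty points for one account."""

    def __init__(self) -> None:
        self.points = 0

    def add_violation(self, points: int) -> str:
        """Record a violation and return the resulting account-level action."""
        self.points += points
        return account_action(self.points)
```

With these made-up numbers, a single 1-point violation leaves the account untouched, while repeated violations move it through temporary suspensions toward a permanent ban.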

In the coming weeks, when we notify users about enforcement actions, we will provide more detailed information, including:

- Which Community Guidelines policy was violated

- The severity level of the violation

- The total violation count

- How close the user is to the next account-level action threshold

- The duration and end date of any suspension
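The fields listed above could be modeled as a simple record. The sketch below is hypothetical: the class name `EnforcementNotice` and its field names are ours for illustration, not a published schema, and the end date is derived here from an assumed start time plus duration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class EnforcementNotice:
    policy: str                    # which Community Guidelines policy was violated
    severity: str                  # severity level of the violation
    violation_count: int           # total violation count
    remaining_to_next_action: int  # distance to the next account-level threshold
    suspension_start: Optional[datetime] = None
    suspension_duration: Optional[timedelta] = None

    @property
    def suspension_end(self) -> Optional[datetime]:
        """End date of the suspension, or None if the account is not suspended."""
        if self.suspension_start is None or self.suspension_duration is None:
            return None
        return self.suspension_start + self.suspension_duration
```

A notice for a violation that carries no suspension would leave both suspension fields unset, so `suspension_end` is `None`.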

Every enforcement action can be appealed. For post takedowns, email moderation@blueskyweb.xyz. For account suspensions, appeal directly in the Bluesky app. Successful appeals undo the enforcement action – we restore your account standing and end any suspension immediately.

Looking Ahead

As Bluesky grows, we’ll continue improving the tools that keep the network safe and open. One upcoming project is a moderation inbox that moves notifications about moderation decisions from email into the app. This will allow us to send a higher volume of notifications and be more transparent about the moderation decisions we make on content.

These updates are part of our broader work on community health. Our goal is to ensure consistent, fair enforcement that holds repeat violators accountable while serving our growing community as we continue to scale.

As always, thank you for being part of this community and helping us build better social media.